Nail your Kubernetes migration

Humanitec enables fast and compliant Kubernetes migration at scale, following enterprise best practices.

3x

Faster K8s rollout

95%

Fewer config files

400%

Average increase in deployment frequency

Don't let Kubernetes migration kill your developer productivity

Build an easy-to-maintain IDP on top of K8s

Get rid of ticket ops

See it in action

An intuitive layer on top of Kubernetes

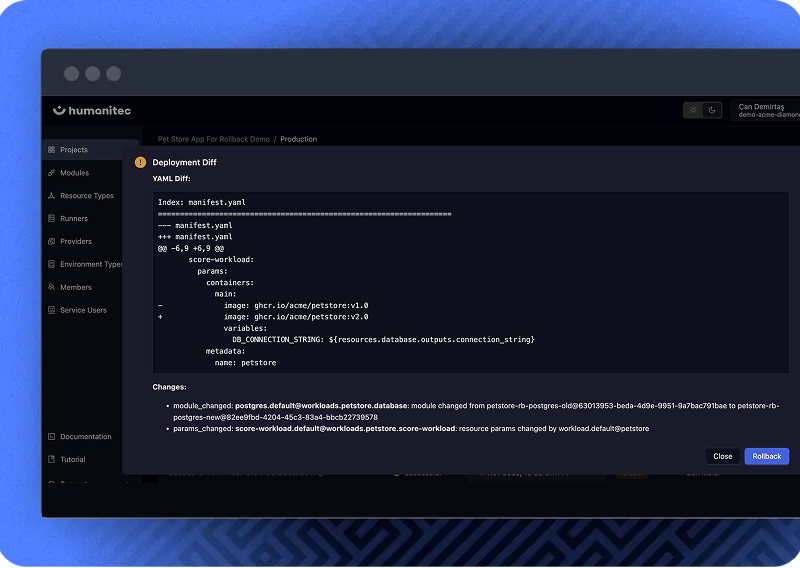

Know exactly what you’re rolling back

HOW IT WORKS

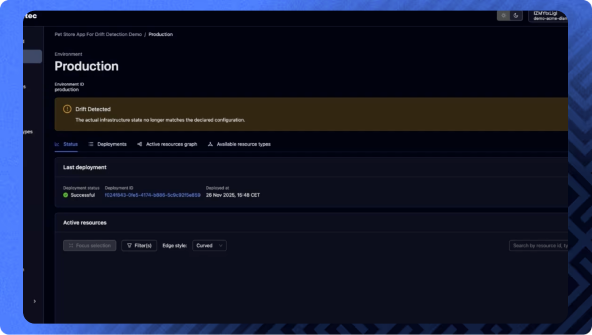

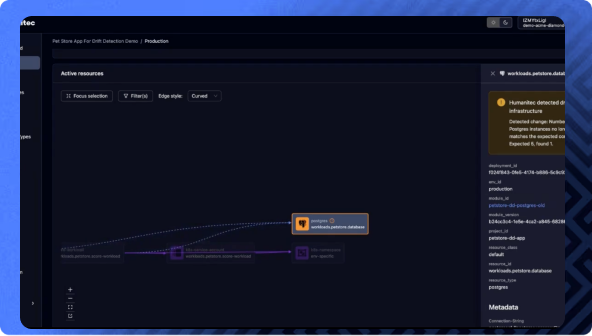

See drift. Understand it. Fix it.

Catch drift where you already monitor your systems

observability stack, so teams see issues immediately.

Know exactly what’s affected

environment are out of alignment

See precisely what changed

node and configuration causing the drift, so teams can resolve the issue quickly and confidently.

Today

vs

With Humanitec

See how you can build an IDP in under 30 minutes

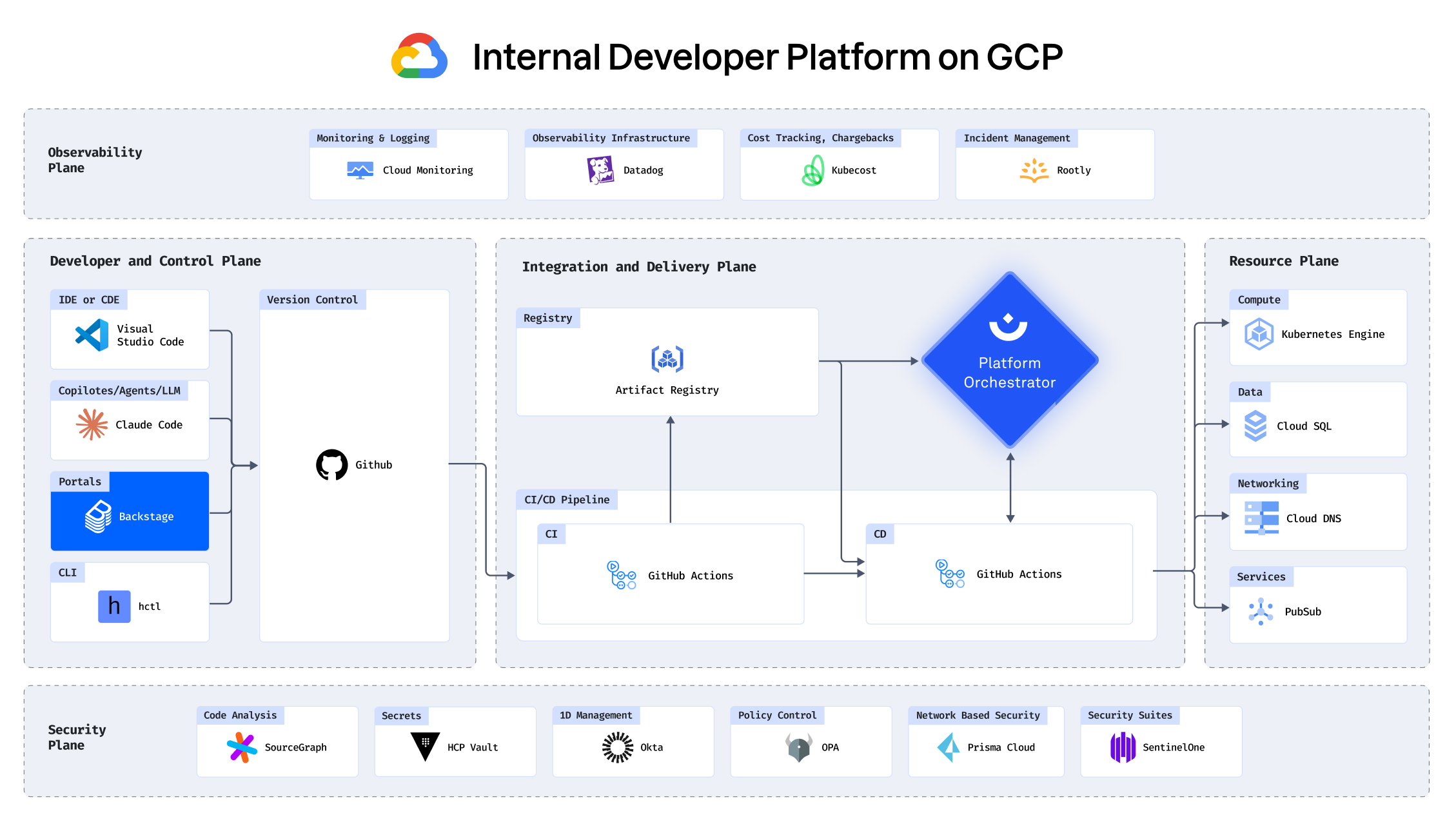

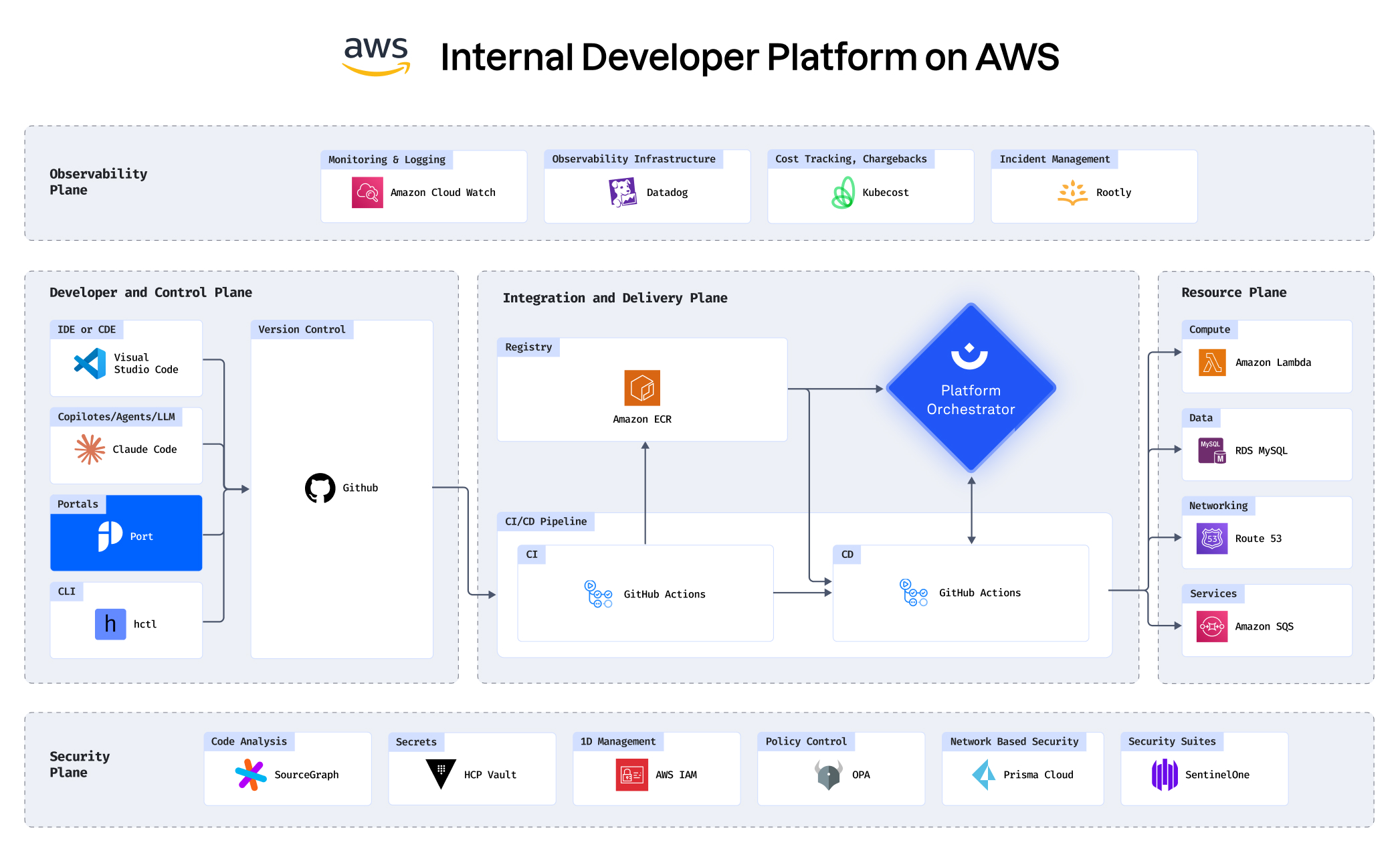

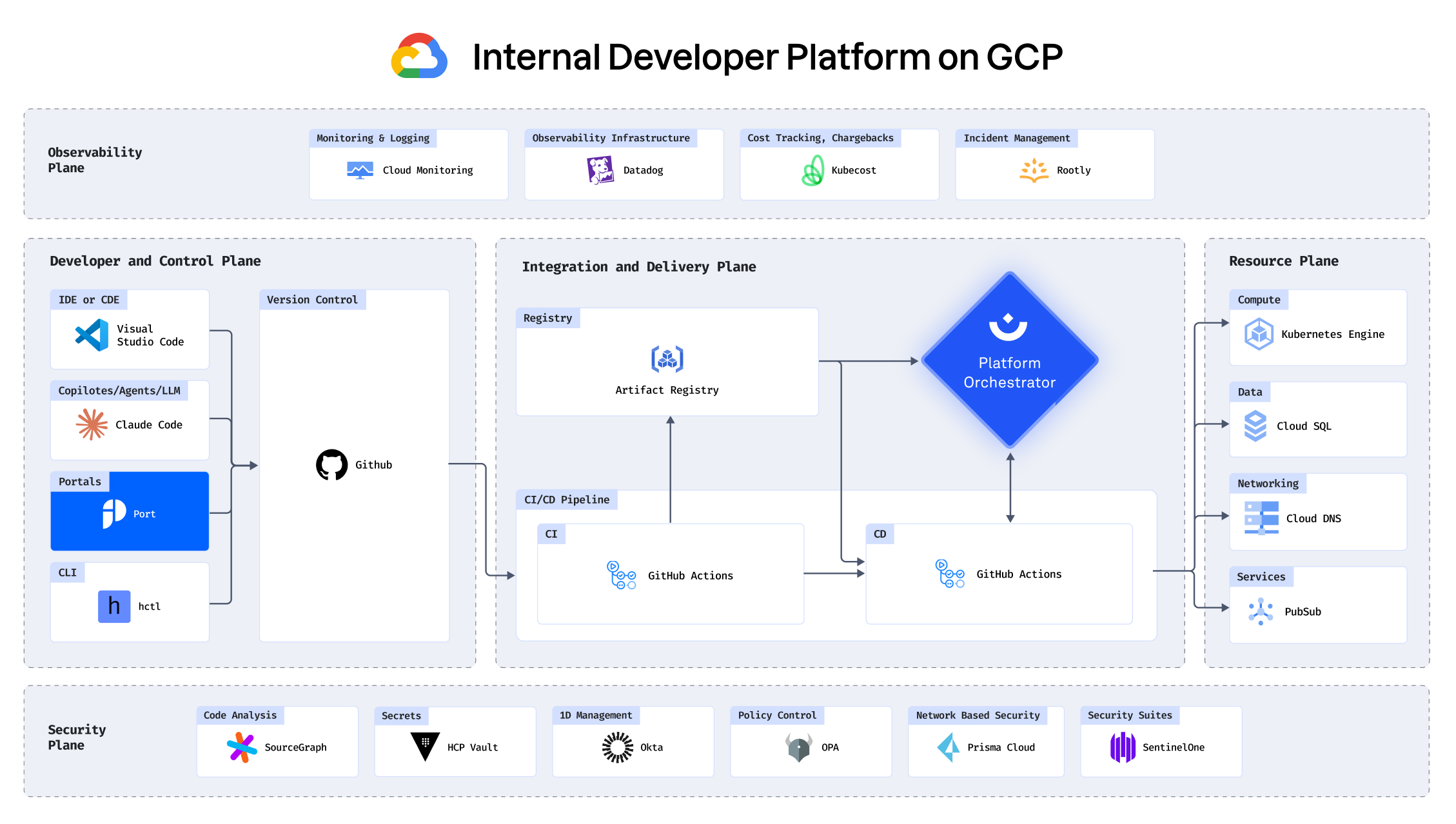

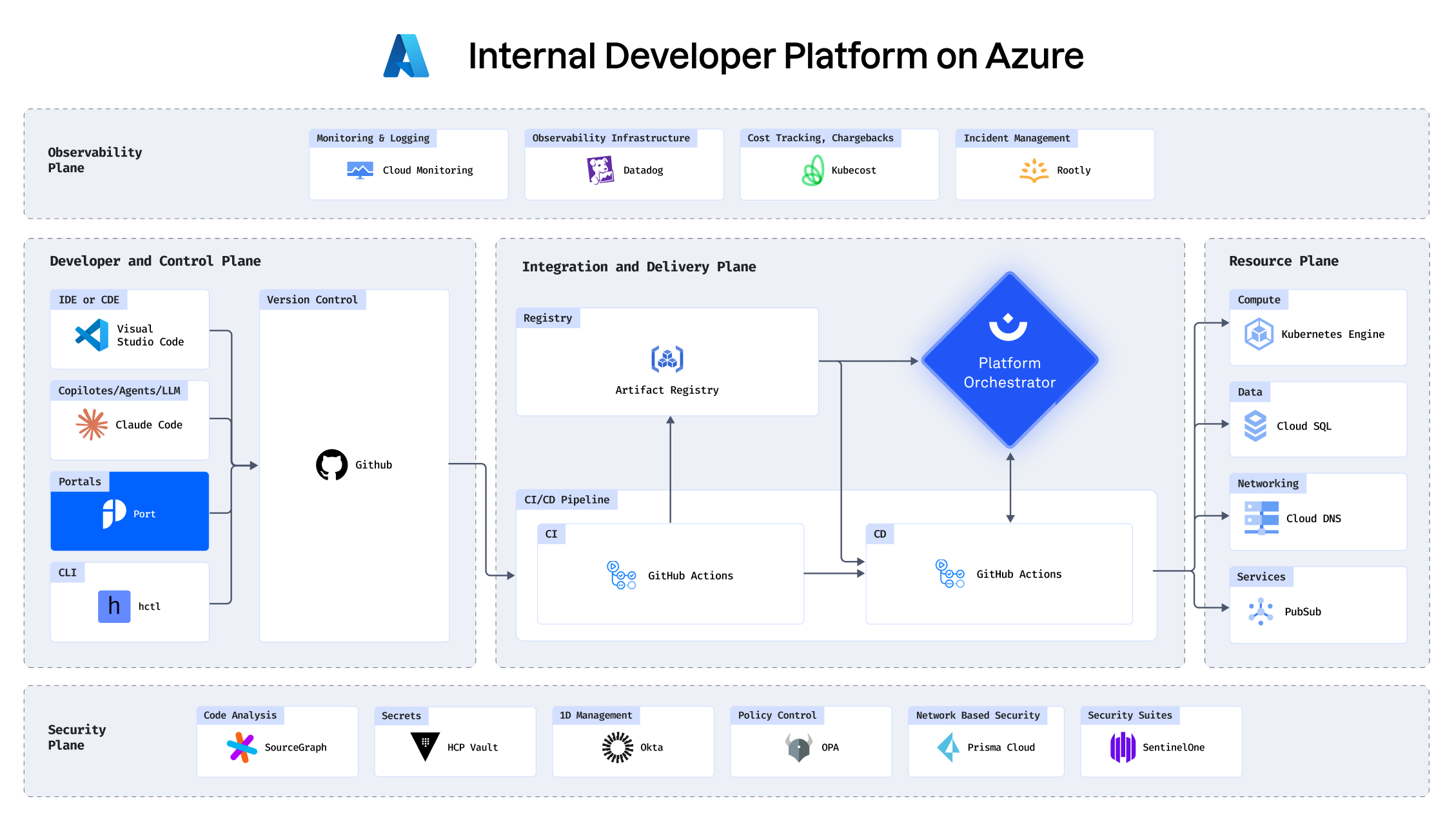

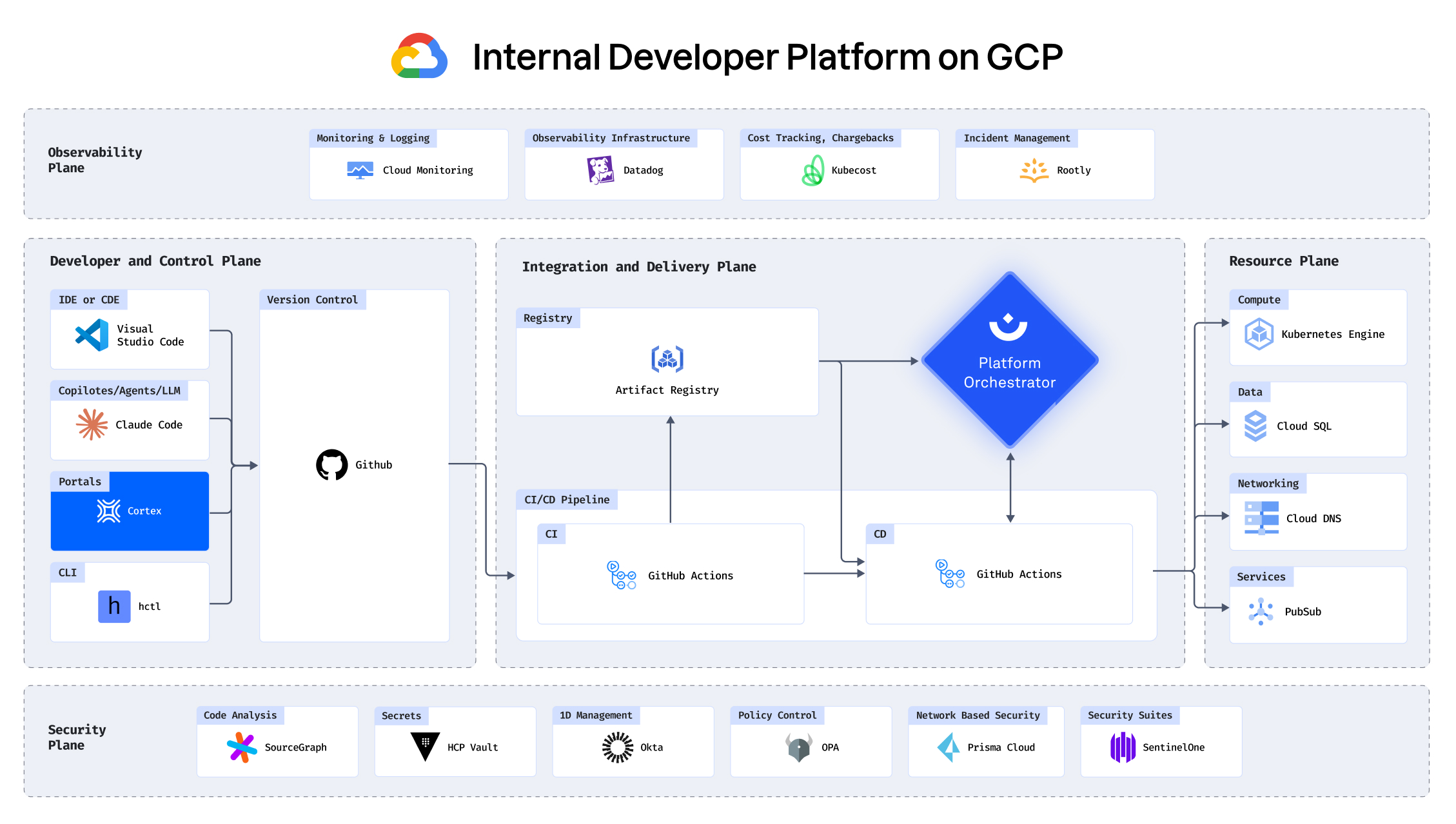

Building an IDP using GCP, Port, Humanitec, Datadog, and more

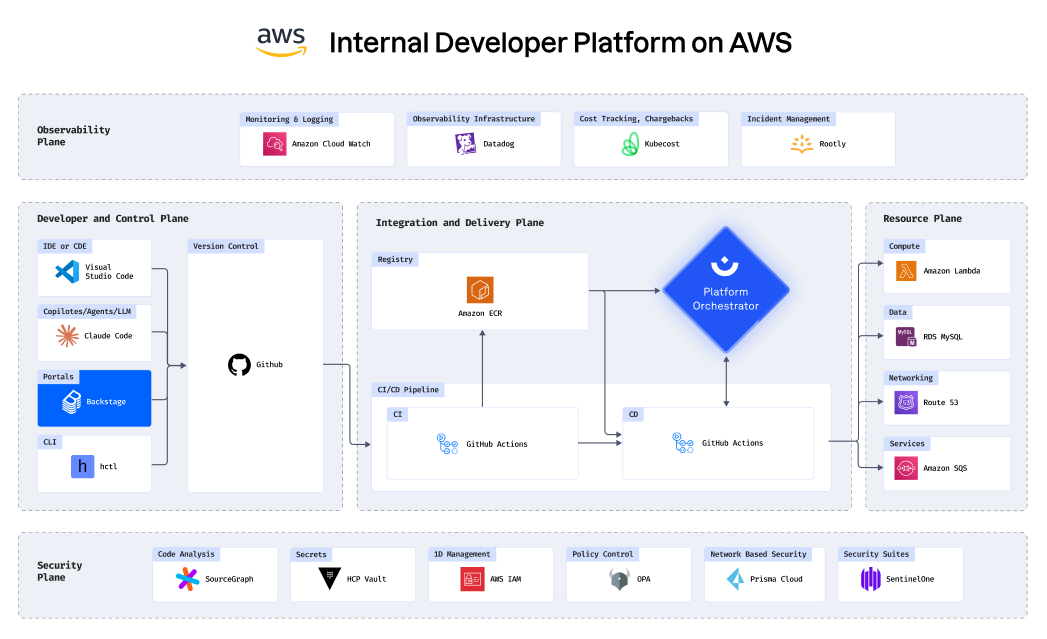

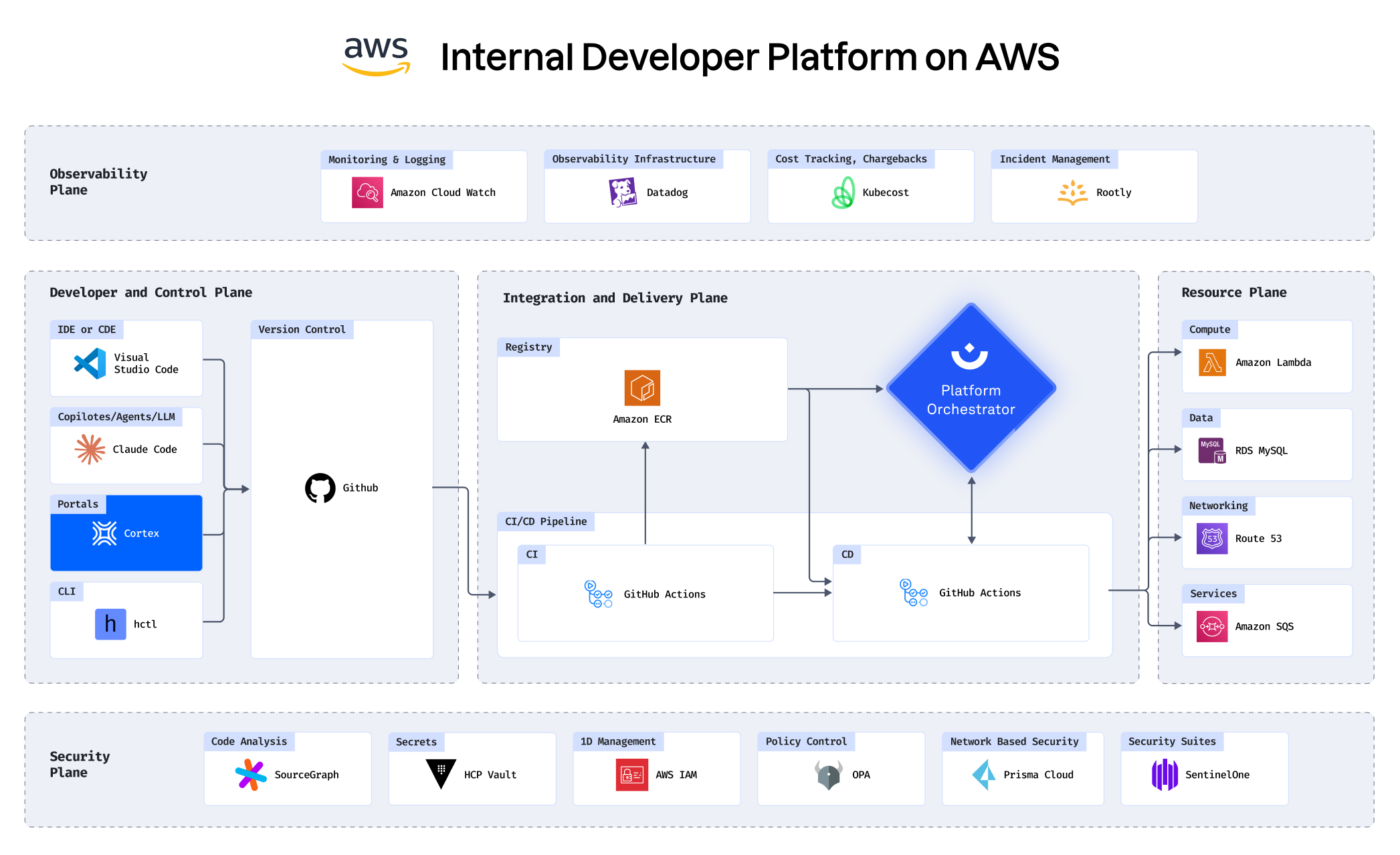

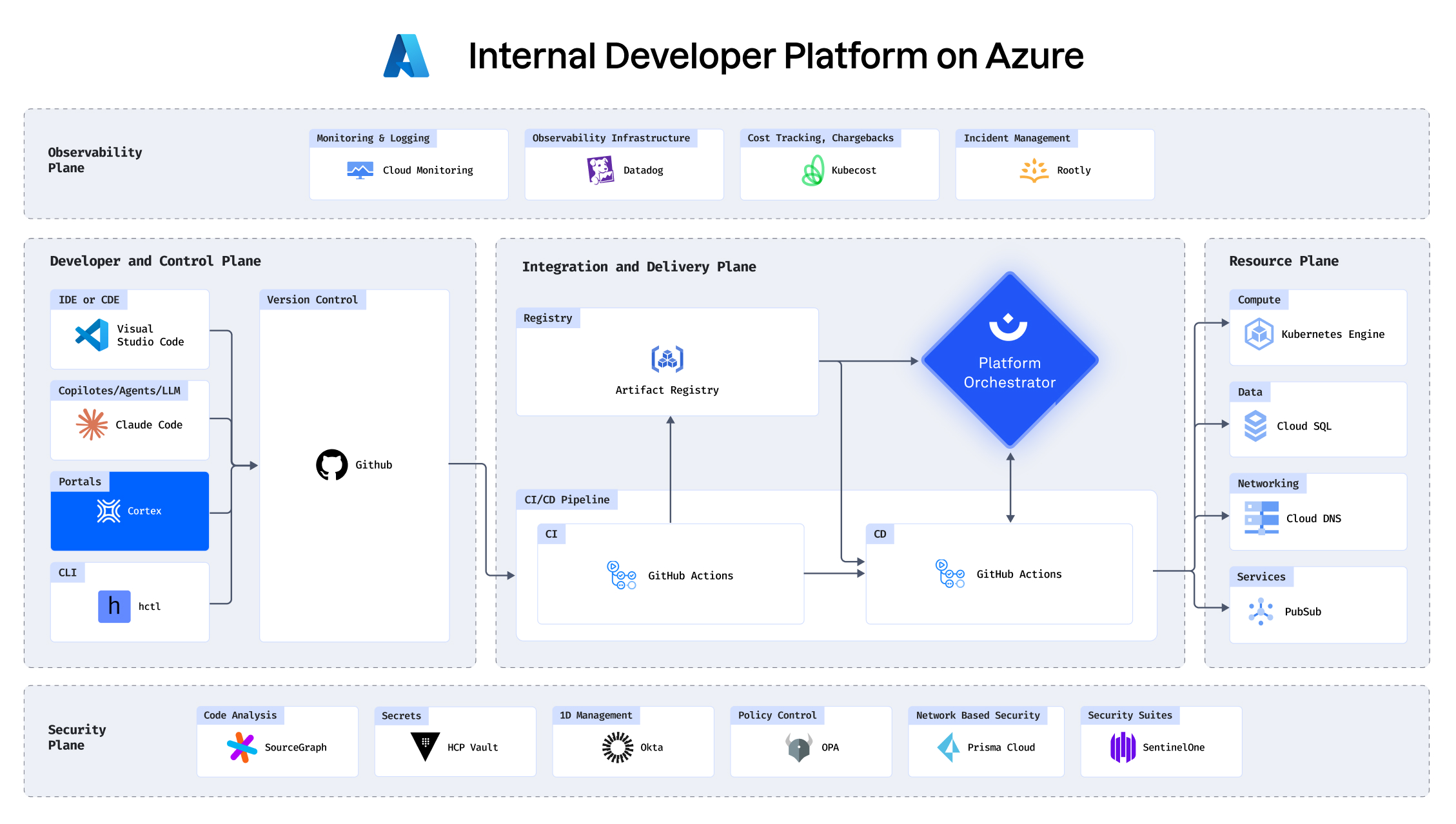

Building an IDP using AWS, Cortex, Humanitec, Datadog, and more

See how you can enable developer self-service in 5 minutes

See how Convera tamed K8s with Humanitec

On their journey to a state-of-the-art cloud-native setup, Convera’s team found themselves spending too much time getting into the weeds of Kubernetes.

Using the Internal Developer Platform built with Humanitec, developers no longer have to waste time figuring out K8s implementation details when promoting workloads from local to production.

Now they have the ability to choose their own level of abstraction and self-serve what they need to run their workloads on top of K8s, without waiting on Ops.

With Humanitec, our developers don't need to touch Kubernetes unless they want to. This eased a lot of pressure from the team, allowing them to focus on what they do best: creating amazing solutions for our customers.

Dive deeper